Music From The Heavens - Moving Now Music To A Kubernetes Cloud

*** This post was written to accompany a talk at an @McrLaravel meetup. A poorly recorded video of half of that talk can be found above (my laptop battery ran out), and the associated slides can be found here. ***

For the last four years I've been working with @nowmusic to build a streaming app to complement their endlessly successful compilation CD product. Over the last six months we've been going through a bit of a change with our tech stack and in this post I'll be exploring some of the reasons why that's been necessary along with a few ways that Laravel has fit into the story.

As a little context: Now Music can be found in the iTunes, Google Play and Amazon stores. It has most of the functionality you'd expect from a modern streaming platform, with a couple of exceptions due to its lower price point. It houses the vast majority of the Now back catalogue along with a regularly updated Charts feature, a dynamic radio playlist generator and the ability to save tracks for offline playback. The whole platform has been built from the ground up, so no white-label streaming solution has been used: we do all of the integration with content providers ourselves.

As for technologies, we're currently native on the front end (Swift and Java) and use mostly PHP, specifically Laravel, on the back end. We're hosted on AWS, which handles all of our scaling, and we rely on quite a few of their additional services, such as SQS, Elastic Transcoder and S3, for extra functionality.

So I mentioned we were going through a bit of a change at the moment. Before I get into exactly what those changes are I want to put a little bit of context around why they are necessary in the first place...

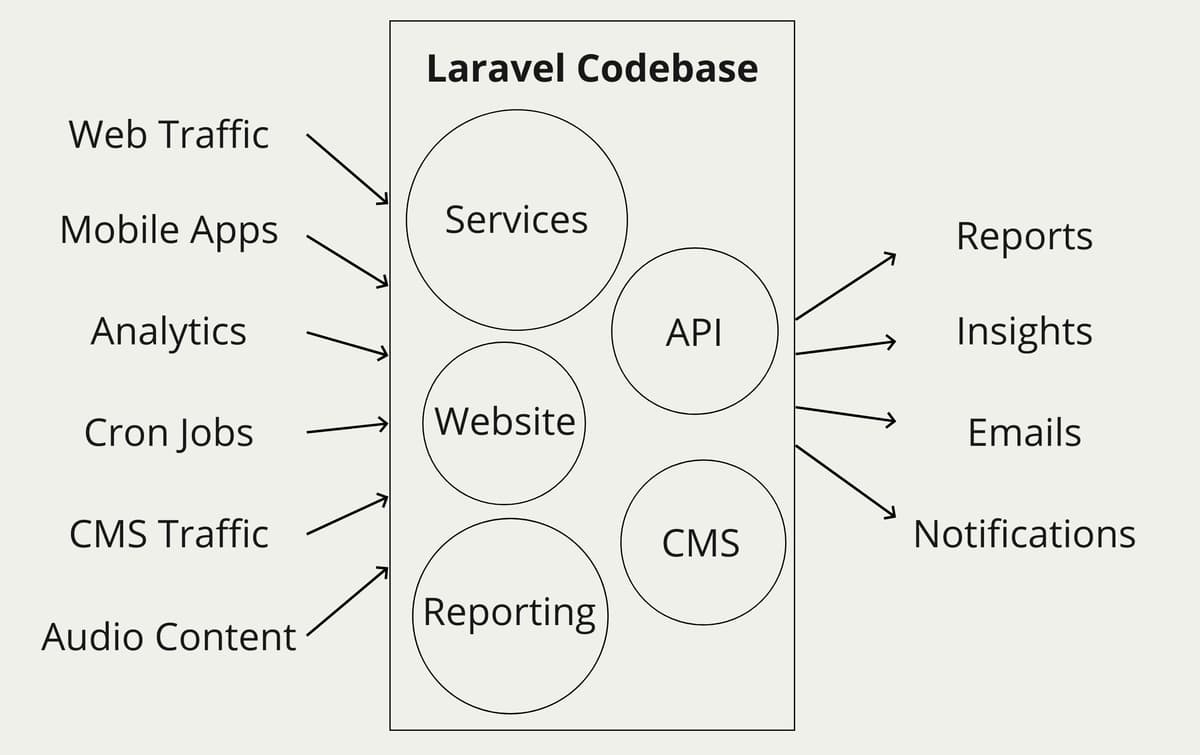

When the platform was first built it was envisaged as an MVP to test market fit. We built quickly in order to validate, and we reacted to both validation and invalidation by changing the way the platform functioned, sometimes drastically. All of these changes and iterations were made under time pressure. Technical debt grew and wasn't addressed as often as a good developer would advise. Eventually the codebase became something that can only be described as an ugly monolith: a single Laravel codebase chock-full of cross dependencies, 'temporary' hacks and legacy code.

But it's important to note that what we had, and still use today, works. It performs its core functions with few problems, other than an occasional spike in database CPU that we haven't managed to track down yet. So why put ourselves through the trouble of changing anything? Developer velocity had slowed right down, and feedback suggested this was simply because developers were afraid of breaking things. The project started out with a significant test suite, but over time it had been abandoned: fixed deadlines left no room to maintain it, and architecture changes meant the tests would have needed significant refactoring. Feature development wasn't fun any more, and that was a problem because Now were beginning to bring their development in-house rather than relying on third parties for development resource.

The straw that broke the camel's back was the rather innocuous task of integrating Amazon in-app purchases. The quickest and safest way to do this is to use pre-built packages that validate the purchase details a device sends to the back end once a user has subscribed. This is exactly how we handled iOS and Android subscriptions at the time. However, when we tried to composer require the appropriate packages we hit an unfortunate situation: the packages required a modern version of Guzzle (an HTTP request library), but our ageing framework, Laravel 4.2, required an old version of it. For those unfamiliar with PHP dependency management, this is a bad thing: Composer installs exactly one version of each package, so there's no way to require two versions of the same package side by side. To use the packages that would have made Amazon IAPs easy to implement, we'd have had to upgrade to Laravel 5, which meant refactoring the whole codebase.

We hacked our way around the problem in the end, but the damage was done - trust was lost in our disheveled monolith and we started looking for new ideas.

Boromir has a point and has obviously been reading the writings of @spolsky, but the decision had to be made in the context of what we saw as two options:

- Hire a new dev team and teach them everything about an old platform which was fraying around the edges and would immediately hinder their ability to iterate rapidly.

- Hire a new dev team and task them with replacing the old platform under the guidance of previous developers who had knowledge of the lessons learnt from developing the old platform.

After some deliberation with management about the pros and cons it was decided that, for the sake of the future dev team, we'd go with option 2.

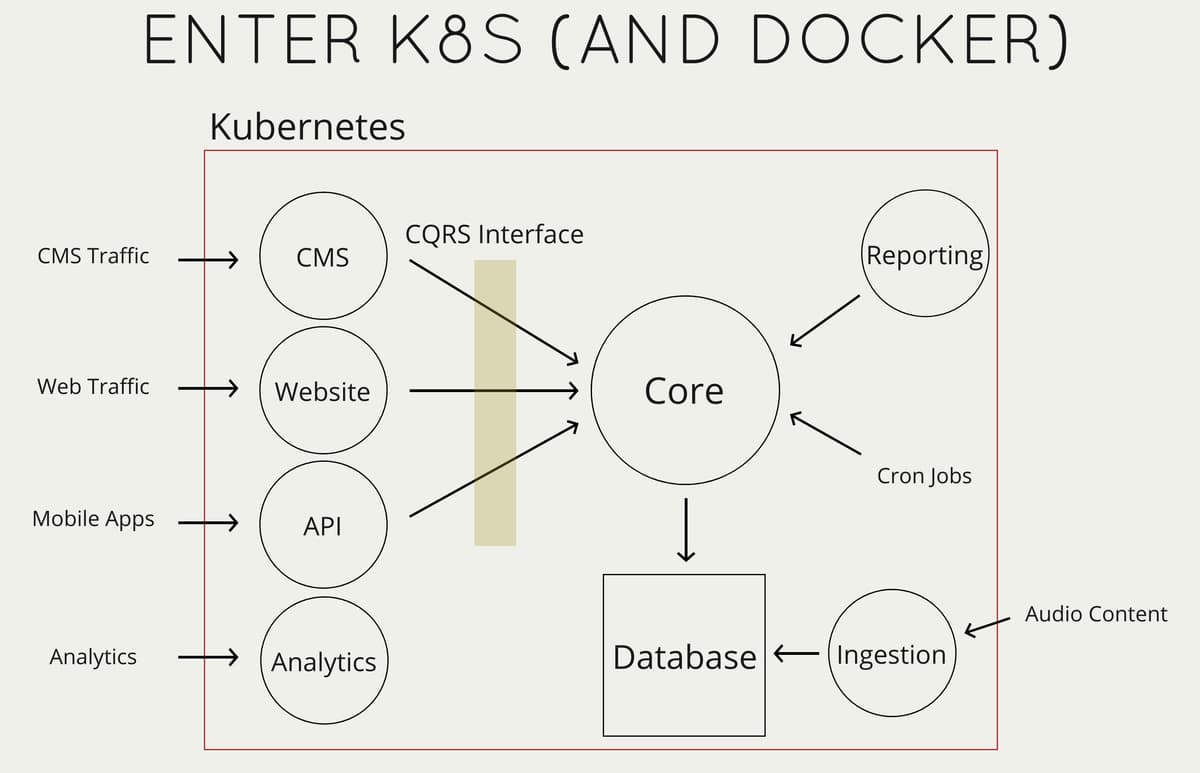

So I was tasked with coming up with something better than had gone before and, like any respectable developer who knows enough to be dangerous, I decided the best bet would be to shove some Docker and Kubernetes into the mix.

The general idea was to document and codify the business logic of the app as a set of Commands and Queries, similar in concept to the CQRS pattern that is oh so popular in .NET land, but with only 10% of the practical complexity. Commands and Queries (collectively: Actions) would be created and iterated upon until they covered all of the required functionality of the business domain in as simple a way as possible. A Core service would then be built to process these Commands and Queries, passing each one through a command bus that could apply cross-cutting concerns both before and after each Action executes. Only Core would be allowed to talk to the database, ensuring that only the agreed, validated and tested set of business interactions could alter any state within the platform.
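
To make the Actions and command bus idea concrete, here is a minimal sketch in Python. The real Core is a Laravel codebase, so treat this as an illustration of the pattern rather than our actual code. The Action names SubscribeUser and GetSubscription, the plan values and the two middleware functions are all hypothetical. The point is that every Action is a plain data object with exactly one handler, and cross-cutting concerns wrap that handler on the way in and on the way out.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Type

# Hypothetical Actions: a Command changes state, a Query only reads it.
@dataclass(frozen=True)
class SubscribeUser:  # Command
    user_id: int
    plan: str


@dataclass(frozen=True)
class GetSubscription:  # Query
    user_id: int


Handler = Callable[[Any], Any]
Middleware = Callable[[Any, Handler], Any]


class CommandBus:
    """Routes each Action to its single handler through a middleware pipeline."""

    def __init__(self, middleware: List[Middleware]) -> None:
        self._handlers: Dict[Type[Any], Handler] = {}
        self._middleware = middleware

    def register(self, action_type: Type[Any], handler: Handler) -> None:
        self._handlers[action_type] = handler

    def dispatch(self, action: Any) -> Any:
        call = self._handlers[type(action)]
        # Wrap from the inside out so the first middleware listed runs outermost.
        for mw in reversed(self._middleware):
            call = (lambda m, nxt: lambda a: m(a, nxt))(mw, call)
        return call(action)


# Cross-cutting concerns: one runs before and after, one guards the input.
audit_log: List[str] = []


def audit(action: Any, next_: Handler) -> Any:
    audit_log.append(f"before {type(action).__name__}")
    result = next_(action)
    audit_log.append(f"after {type(action).__name__}")
    return result


def validate(action: Any, next_: Handler) -> Any:
    if isinstance(action, SubscribeUser) and action.plan not in {"monthly", "annual"}:
        raise ValueError(f"unknown plan: {action.plan}")
    return next_(action)


# A dict standing in for the database that only Core may touch.
subscriptions: Dict[int, str] = {}


def handle_subscribe(command: SubscribeUser) -> None:
    subscriptions[command.user_id] = command.plan


def handle_get_subscription(query: GetSubscription) -> Any:
    return subscriptions.get(query.user_id)


bus = CommandBus([audit, validate])
bus.register(SubscribeUser, handle_subscribe)
bus.register(GetSubscription, handle_get_subscription)

bus.dispatch(SubscribeUser(user_id=1, plan="monthly"))
print(bus.dispatch(GetSubscription(user_id=1)))  # prints: monthly
```

Handlers never see the middleware. Concerns like auditing or validation can therefore be applied to every Action in one place, and each handler stays small enough to test in isolation.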

By taking this approach we would be building a pristine representation of the business with no need to concern ourselves with how the outside world might interact with it. Processing user interaction would be left to the other services (website, API, etc.). I guess you could think of the user-facing services as the V and C from MVC, which would make Core the model, but shared between multiple front ends and protected by a super strict, very well tested interface.

The plan seemed good so we started building, and the more I built the better it felt. The Core service fell into place quickly. The strict CQRS-style interface, in combination with an updated dev process, allowed us to keep test coverage close to 100% at all times.

As Now's internal developers came on board, a combination of forward planning, GitLab, peer reviews, a comprehensive CI setup and Docker (encapsulating both the services themselves and our development environments) has allowed us to maintain a rapid output with a very small team.

But the talk associated with this post is being presented at a Laravel meetup, and so far all I've done is bitch about technical debt and show off some fancy new architecture. So I'd better talk a little about a couple of Laravel-specific things that pleasantly surprised us.

The Authentication Problem

By splitting our platform into multiple services (each an independent Laravel codebase), we needed a way to let users authenticate via any of the user-facing ones, most notably the Website and API. We also had some additional requirements:

- Service responsibilities should be well defined and segregated

- Only Core should be making any changes to the database

- We'd like to use Laravel's built-in authentication abstractions to allow new devs to onboard easily and ensure compatibility with other Laravel libraries.

Luckily for us, it turns out that Laravel's session-based auth (which we wanted to use in the website) relies on an interface called UserProvider, which is responsible for exchanging user credentials for a user object. The default implementation, EloquentUserProvider, simply pulls a row from the database and hydrates a User Eloquent model (its sibling, DatabaseUserProvider, does the same with the query builder and a GenericUser). The provider is built by Laravel's auth manager (custom providers can be registered with Auth::provider() and selected in config/auth.php), so it's also trivially easy for us to replace. All we needed to do was write our own implementation that sent the user's credentials to Core and parsed the response into a User object instead. Once this alternative implementation is ready, it can be swapped in for the default one. Easy.
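
Here is a rough Python sketch of the swap. It assumes a hypothetical Core client with a query(name, payload) method and two hypothetical Core queries, FindUserByEmail and VerifyPassword. It mirrors only the two UserProvider responsibilities that matter during login (Laravel's retrieveByCredentials and validateCredentials), while the real contract has a few more. The attempt function is a simplified stand-in for what the auth guard does.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Protocol


@dataclass(frozen=True)
class User:
    id: int
    email: str


class UserProvider(Protocol):
    """The two UserProvider responsibilities used during a login attempt."""

    def retrieve_by_credentials(self, credentials: Dict[str, str]) -> Optional[User]: ...

    def validate_credentials(self, user: User, credentials: Dict[str, str]) -> bool: ...


class CoreClient(Protocol):
    """Hypothetical client for Core's Queries (a real one would make HTTP calls)."""

    def query(self, name: str, payload: Dict[str, str]) -> Optional[dict]: ...


class CoreUserProvider:
    """Asks Core about users instead of reading the database directly."""

    def __init__(self, core: CoreClient) -> None:
        self._core = core

    def retrieve_by_credentials(self, credentials: Dict[str, str]) -> Optional[User]:
        # Look the user up without sending the password along.
        lookup = {k: v for k, v in credentials.items() if k != "password"}
        data = self._core.query("FindUserByEmail", lookup)
        return User(id=data["id"], email=data["email"]) if data else None

    def validate_credentials(self, user: User, credentials: Dict[str, str]) -> bool:
        result = self._core.query(
            "VerifyPassword", {"user_id": str(user.id), "password": credentials["password"]}
        )
        return bool(result and result.get("valid"))


def attempt(provider: UserProvider, credentials: Dict[str, str]) -> Optional[User]:
    """Simplified version of what an auth guard does with any UserProvider."""
    user = provider.retrieve_by_credentials(credentials)
    if user is not None and provider.validate_credentials(user, credentials):
        return user
    return None


class FakeCore:
    """In-memory stand-in for Core; stores a plain-text password for demo only."""

    def __init__(self) -> None:
        self._users = {"fan@example.com": {"id": 1, "email": "fan@example.com", "password": "secret"}}

    def query(self, name: str, payload: Dict[str, str]) -> Optional[dict]:
        if name == "FindUserByEmail":
            row = self._users.get(payload.get("email", ""))
            return {"id": row["id"], "email": row["email"]} if row else None
        if name == "VerifyPassword":
            for row in self._users.values():
                if str(row["id"]) == payload["user_id"]:
                    return {"valid": row["password"] == payload["password"]}
            return {"valid": False}
        raise ValueError(f"unknown query: {name}")
```

The calling code depends only on the UserProvider shape. Swapping the database-backed provider for CoreUserProvider therefore changes nothing above it.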

We also wanted to use Laravel Passport for our API, as it would be familiar to most Laravel developers. Just like the session auth, it uses the UserProvider interface, which we'd already replaced in the website codebase. Copy and paste that over to the API and it works a treat. We did hit one snag here, though: Passport is hard-coded to assume it can store OAuth tokens in a database. We didn't want to provision a new database just for OAuth tokens when we already had a Redis cluster that we were using to store website session tokens. To override this functionality we had to follow the class dependency tree up about six levels to find the first class that was being resolved by the IoC container. We then subclassed each of those six classes so that each one referenced our subclasses further down the dependency tree, until we were finally able to inject our custom implementation of OAuth token storage.
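
The storage we were after can be sketched in Python as a small token repository interface with a Redis-backed implementation. This is not Passport's actual class hierarchy. AccessTokenRepository, the oauth:access: key prefix and the FakeRedis test double are all hypothetical. The Redis implementation relies only on setex, get and delete, which redis-py's client provides. Because setex sets a TTL, Redis evicts expired tokens itself and no cleanup job is needed.

```python
import json
import time
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, Optional, Tuple


class AccessTokenRepository(ABC):
    """The kind of storage interface we wished we could bind directly."""

    @abstractmethod
    def save(self, token_id: str, user_id: int, ttl_seconds: int) -> None: ...

    @abstractmethod
    def find_user_id(self, token_id: str) -> Optional[int]: ...

    @abstractmethod
    def revoke(self, token_id: str) -> None: ...


class RedisAccessTokenRepository(AccessTokenRepository):
    """Keeps tokens in Redis with a TTL, so Redis evicts expired tokens itself.

    `client` is anything exposing redis-py's setex/get/delete methods.
    """

    def __init__(self, client: Any, prefix: str = "oauth:access:") -> None:
        self._client = client
        self._prefix = prefix

    def save(self, token_id: str, user_id: int, ttl_seconds: int) -> None:
        payload = json.dumps({"user_id": user_id})
        self._client.setex(self._prefix + token_id, ttl_seconds, payload)

    def find_user_id(self, token_id: str) -> Optional[int]:
        raw = self._client.get(self._prefix + token_id)  # redis-py returns bytes or None
        return json.loads(raw)["user_id"] if raw is not None else None

    def revoke(self, token_id: str) -> None:
        self._client.delete(self._prefix + token_id)


class FakeRedis:
    """Tiny in-memory test double covering only setex/get/delete."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._data: Dict[str, Tuple[str, float]] = {}
        self._clock = clock

    def setex(self, name: str, seconds: int, value: str) -> None:
        self._data[name] = (value, self._clock() + seconds)

    def get(self, name: str) -> Optional[str]:
        item = self._data.get(name)
        if item is None or item[1] <= self._clock():
            self._data.pop(name, None)  # treat expired keys as missing
            return None
        return item[0]

    def delete(self, *names: str) -> None:
        for name in names:
            self._data.pop(name, None)
```

An interface like this at the bottom of the tree would have let us bind one class in the container instead of subclassing six.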

I guess Taylor didn't build everything to an interface.

Environment Specific Services

We need to run our platform in multiple environments: dev, CI, staging, production and possibly others. In each of these environments we need to be able to adjust the way our codebases function, especially when communicating with third-party APIs. A good example of this is Stripe. In local dev and staging we want to talk to Stripe's testing environment. In CI we want to stub it out completely so that our tests run super quickly. In production we obviously want to talk to Stripe's production servers. The same flexibility extends to internal functionality: for instance, we don't want the staging environment sending financial reports out to our partners every week!

Our first step towards this is something often recommended as general practice: build to an interface. By creating interface wrappers around both internal and external components, we can create multiple implementations that abide by the same interface and slot them into our codebase as appropriate. For instance, we built our platform's secrets management component as an interface with two concrete implementations: one loads secrets from a flat file and the other loads them from AWS Secrets Manager. If we were to move to Google Cloud for some reason, swapping out the way we manage secrets would be trivial: just create a new implementation of the interface.
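
The following is a minimal Python sketch of the secrets interface. It assumes secrets are stored as a JSON object, either in a local file or as the SecretString of a single Secrets Manager secret. The class names and the STRIPE_SECRET_KEY key are hypothetical. get_secret_value is the real boto3 Secrets Manager call, and boto3 is imported lazily so the file-based implementation runs without it installed.

```python
import json
import os
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Dict


class Secrets(ABC):
    """What the rest of the codebase depends on: just a lookup by key."""

    @abstractmethod
    def get(self, key: str) -> str: ...


class FileSecrets(Secrets):
    """Reads secrets from a flat JSON file (handy for local dev and CI)."""

    def __init__(self, path: str) -> None:
        self._values: Dict[str, str] = json.loads(Path(path).read_text())

    def get(self, key: str) -> str:
        return self._values[key]


class AwsSecretsManagerSecrets(Secrets):
    """Reads one Secrets Manager secret whose SecretString is a JSON object."""

    def __init__(self, secret_id: str, region: str) -> None:
        import boto3  # lazy import: FileSecrets works without boto3 installed

        client = boto3.client("secretsmanager", region_name=region)
        response = client.get_secret_value(SecretId=secret_id)
        self._values: Dict[str, str] = json.loads(response["SecretString"])

    def get(self, key: str) -> str:
        return self._values[key]


def stripe_key(secrets: Secrets) -> str:
    # Consumers see only the interface, never where the value came from.
    return secrets.get("STRIPE_SECRET_KEY")


# Usage with a throwaway file; production would construct AwsSecretsManagerSecrets instead.
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as handle:
    json.dump({"STRIPE_SECRET_KEY": "sk_test_example"}, handle)
secrets: Secrets = FileSecrets(handle.name)
os.unlink(handle.name)  # values were loaded eagerly, so the file can go
print(stripe_key(secrets))  # prints: sk_test_example
```

A Google Cloud version would be one more subclass of Secrets. Nothing that calls stripe_key would change.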

We also make heavy use of Laravel's IoC container to inject all of our interfaces into the classes that require them. This means the dependent classes have no knowledge of which implementation they are actually given. It also gives us a central location (the container itself) for swapping between our different interface implementations.
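
Laravel's container does this with $app->bind() and $app->make(). The toy Python container below shows the same idea in a few lines. PaymentGateway, FakeGateway and SubscriptionService are hypothetical names. SubscriptionService only ever sees the interface, and a single bind() call is the one place that decides what it actually gets.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, List, Tuple, Type, TypeVar

T = TypeVar("T")


class Container:
    """A toy service container: maps an abstract type to a factory."""

    def __init__(self) -> None:
        self._bindings: Dict[type, Callable[["Container"], Any]] = {}

    def bind(self, abstract: type, factory: Callable[["Container"], Any]) -> None:
        self._bindings[abstract] = factory

    def make(self, abstract: Type[T]) -> T:
        return self._bindings[abstract](self)


class PaymentGateway(ABC):
    @abstractmethod
    def charge(self, user_id: int, pence: int) -> str: ...


class FakeGateway(PaymentGateway):
    """Records charges instead of taking money."""

    def __init__(self) -> None:
        self.charges: List[Tuple[int, int]] = []

    def charge(self, user_id: int, pence: int) -> str:
        self.charges.append((user_id, pence))
        return f"fake_{len(self.charges)}"


class SubscriptionService:
    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway  # knows the interface, not the implementation

    def renew(self, user_id: int, pence: int) -> str:
        return self._gateway.charge(user_id, pence)


container = Container()
gateway = FakeGateway()
# The one place that decides which PaymentGateway everything receives.
container.bind(PaymentGateway, lambda c: gateway)
container.bind(SubscriptionService, lambda c: SubscriptionService(c.make(PaymentGateway)))

service = container.make(SubscriptionService)
print(service.renew(user_id=1, pence=999))  # prints: fake_1
```

In a Laravel codebase the equivalent binding would live in a service provider, so swapping implementations is a one-line change there.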

With this setup, the only thing left is to tell the IoC container which implementations to use. Our solution is to put this information in each codebase's environment using ENV vars. This again decouples the choice of concrete implementation from the codebase and moves it to the infrastructure level, which was our original goal: different functionality in different runtime environments. Because every service runs in a Docker container, we can set the ENV vars at runtime, so we can trivially spin up different copies of the same codebase with different functionality.
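
One way to let ENV vars pick the implementation is sketched below in Python. The variable names are hypothetical: PAYMENT_GATEWAY selects the driver and STRIPE_SECRET_KEY supplies the key. Stripe tells test and live mode apart by the key itself (test secret keys start with sk_test_, live ones with sk_live_). Dev and staging can therefore share the "stripe" driver with production and differ only in the key they are given. The gateway classes are placeholders that only report their mode; a real one would call Stripe's SDK.

```python
import os
from abc import ABC, abstractmethod
from typing import Callable, Dict, Mapping


class PaymentGateway(ABC):
    @abstractmethod
    def mode(self) -> str: ...


class StubGateway(PaymentGateway):
    """Never leaves the process; used in CI."""

    def mode(self) -> str:
        return "stub"


class StripeGateway(PaymentGateway):
    """Placeholder for a real Stripe client; here it only inspects its key."""

    def __init__(self, api_key: str) -> None:
        self._api_key = api_key

    def mode(self) -> str:
        # Stripe distinguishes test and live mode by the secret key prefix.
        if self._api_key.startswith("sk_test_"):
            return "test"
        if self._api_key.startswith("sk_live_"):
            return "live"
        raise ValueError("unrecognised Stripe secret key")


# Hypothetical driver names mapped to factories that read whatever else they need.
GATEWAYS: Dict[str, Callable[[Mapping[str, str]], PaymentGateway]] = {
    "stub": lambda env: StubGateway(),
    "stripe": lambda env: StripeGateway(env["STRIPE_SECRET_KEY"]),
}


def payment_gateway_from_env(env: Mapping[str, str] = os.environ) -> PaymentGateway:
    driver = env.get("PAYMENT_GATEWAY", "")
    if driver not in GATEWAYS:
        # No default on purpose: misconfiguration should fail loudly at boot.
        raise ValueError(f"unsupported PAYMENT_GATEWAY: {driver!r}")
    return GATEWAYS[driver](env)
```

Under this scheme, CI would set PAYMENT_GATEWAY=stub. Dev and staging would set stripe plus a test key, and production would set stripe plus a live key. The factory deliberately has no default, so a container with a missing or misspelled value fails at boot instead of silently stubbing out payments.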

Kubernetes takes this a step further: when the ENV config in a Deployment's pod template is changed, it automatically replaces the running containers with new ones that pick up the change. This is super useful in a scaled environment, as it lets us swap code implementations in a live service with multiple running containers without having to adjust each one individually.

All this flexibility has given us the option to do a few cool things:

- Push new code to production behind an interface and activate it across the cluster with a small config change.

- Roll out code behind an interface gradually by having Kubernetes apply the ENV change as a rolling update.

- Create a staging environment which is identical to production with the exception of ENV vars and still be able to run significantly different code when interacting with third parties.

- Stub out interaction with all external services during CI tests and optionally during local dev.

Overall the developer experience has improved tenfold, and productivity has so far remained very high. We've completed three of our new Dockerized services so far; I guess time will tell whether we chose the right path by rebuilding and introducing K8s into the mix.